On the Road to Transparent AI

Moritz Helias from Forschungszentrum Jülich is working with an interdisciplinary team to shed more light on the "black box" of AI. In an interview, he explains exactly what his project will be about.

© Forschungszentrum Jülich

/FZJ/ Artificial intelligence (AI) surpassed humans at chess, poker, and Go years ago. AI systems are just as good as human experts at analysing X-rays and share prices, and sometimes even better. But even the most accurate AI-based analyses share one flaw: to date, it is often barely possible for a person to understand how they come about. As part of a new project, an interdisciplinary team is now working to shed more light on AI’s “black box”. Moritz Helias from Forschungszentrum Jülich is coordinating the project, which is receiving €2 million in funding from the Federal Ministry of Education and Research.

Forschungszentrum Jülich (FZJ): Professor Helias, it is often not clear to a layperson how even conventional computer applications work. Why is the lack of transparency a particular problem in AI?

Traditional applications usually have very precise definitions of what information is stored where and how it is used in the course of a computation. Some of these models are very complex, weather and climate models for example. Even so, it is possible to track the computations precisely, step by step, and to gauge exactly what impact changing a given parameter will have.

AI applications are different. They often really are a black box, especially in deep learning, where the information is ultimately stored in millions of trained parameters. These applications are based on artificial neural networks, which are modelled on the natural neural networks in the brain. They consist of multiple layers of interconnected artificial neurons and “learn” from large data sets to derive a particular result or prediction from an input. The information is not stored in any particular place; instead, it resides in the strengths of the connections between the artificial neurons. As yet, no one can say exactly which features of the data ultimately lead to a decision.
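To make the idea of information residing in connection strengths a little more concrete, here is a minimal Python sketch of a forward pass through a toy network with one hidden layer. The layer sizes, the weight values, and the function names (`layer`, `forward`) are hypothetical and chosen only for illustration; a real deep network has many more layers and millions of weights learned from data.

```python
import math

# Hypothetical toy network: 3 inputs -> 2 hidden neurons -> 1 output.
# The weights are made-up numbers; in a trained network they would be learned.
W1 = [[0.5, -1.2, 0.3],
      [0.8, 0.1, -0.7]]
b1 = [0.0, 0.1]
W2 = [[1.5, -2.0]]
b2 = [0.2]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(weights, biases, inputs, activation):
    """One layer: each neuron takes a weighted sum of its inputs plus a bias,
    then applies a nonlinearity. The 'knowledge' sits entirely in the weights."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(x):
    """Pass the input through the layers in series."""
    hidden = layer(W1, b1, x, relu)
    return layer(W2, b2, hidden, sigmoid)[0]


print(forward([1.0, 0.5, -0.2]))
```

Even in this tiny example, the output is a nested combination of every weight; with millions of weights, working out by inspection which parts of an input drove a particular output is no longer practical.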

FZJ: Where in practice would more transparency be desirable?

In many areas, the lack of transparency is not really a problem, for example when suggesting products online, understanding voice commands, or sorting photo collections on your PC. In some sensitive areas, however, you really want insight into how decisions come about.

This is especially important in medicine, for example to understand why an AI application decides for or against surgery. The same applies to AI-based assistance systems that are involved in operations and support the surgeon.

Transparency also matters at a more fundamental level: you can only correct specific mistakes that an AI makes if you know what causes them. Methods based on artificial intelligence sometimes make very obvious mistakes; a familiar example is misinterpreting images. Errors like these can have major consequences, for example in self-driving cars, or if an AI-based access control check is fooled into failing.

FZJ: How do you want to create more transparency in your new research project?

At its core, the project draws on the renormalization group, from which it takes its name, “RenormalizedFlows”. The method counts among the greatest achievements of 20th-century theoretical physics. It was developed specifically to analyse processes that result from the interaction of many subprocesses. That is why a group of physicists with many years of experience in this field, headed by Carsten Honerkamp from RWTH Aachen University, is involved in the project. The team also includes experts from several other disciplines: neuroscientists and medical researchers headed by Tonio Ball from the Medical Center of the University of Freiburg, and computer scientists headed by Frank Hutter, one of the world’s leading experts on structure optimization for neural networks. The project gains a strong practical orientation from the medical diagnostic hardware and software solutions of Frank Zanow and Patrique Fiedler of the participating company eemagine Medical Imaging Solutions GmbH.

FZJ: What exactly is your approach?

Moritz Helias, RWTH Aachen University © Peter Winandy

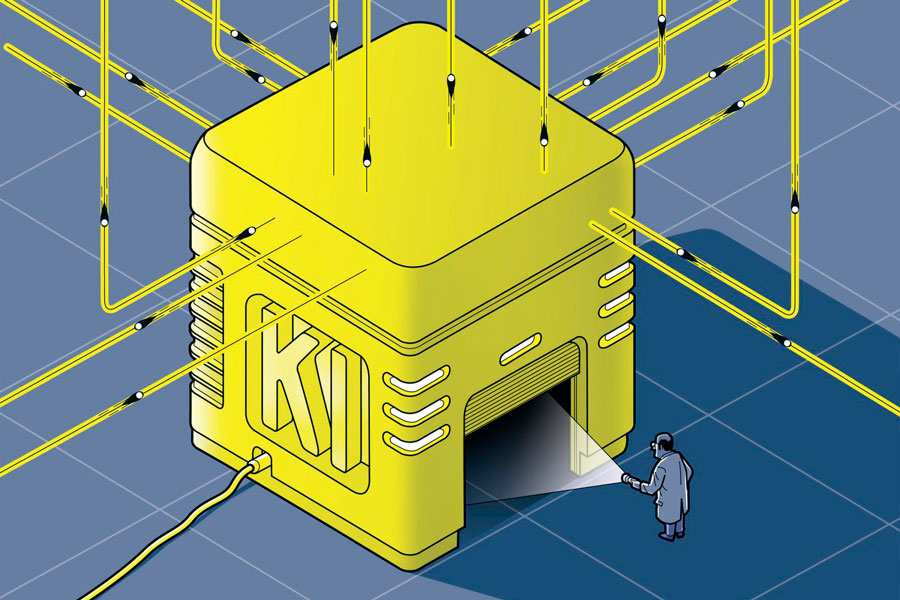

Deep neural networks consist of many layers of neurons connected in series. Input data are processed step by step as they pass through these layers. But this processing is so complex that it is not easily comprehensible to people.

In the project, we want to combine the renormalization group I mentioned with another, relatively new development: invertible deep networks. These networks make the underlying causes in the data visible and allow the entire process to be inverted. In principle, this makes it possible to compute the most plausible data corresponding to a particular result or cause. That is hugely important, because it yields decisions whose causes can be examined directly, making them comprehensible to people.
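What it means for a network to be inverted can be illustrated with an affine coupling layer, one widely used building block of invertible networks (as in RealNVP-style normalizing flows). Whether the project uses this particular construction is an assumption here, and the `scale` and `shift` functions below are hypothetical fixed toy functions standing in for what would normally be small trained networks. The sketch shows only the invertibility property: an output can be mapped back exactly to the input that produced it.

```python
import math


# Hypothetical stand-ins for small trained networks inside a coupling layer.
def scale(x1):
    return 0.5 * math.tanh(x1)


def shift(x1):
    return 2.0 * x1 - 1.0


def coupling_forward(x):
    """Affine coupling: keep x1 unchanged, transform x2 using functions of x1."""
    x1, x2 = x
    return (x1, x2 * math.exp(scale(x1)) + shift(x1))


def coupling_inverse(y):
    """Exact inverse: y1 equals x1, so the same scale and shift can be recomputed."""
    y1, y2 = y
    return (y1, (y2 - shift(y1)) * math.exp(-scale(y1)))


def swap(v):
    """Swap the two halves so that the next layer transforms the other half."""
    return (v[1], v[0])


def network_forward(x):
    # Two coupling layers with a swap in between.
    return coupling_forward(swap(coupling_forward(x)))


def network_inverse(z):
    # Undo the layers in reverse order (swap is its own inverse).
    return coupling_inverse(swap(coupling_inverse(z)))


x = (0.3, -1.7)
z = network_forward(x)
x_rec = network_inverse(z)
print(z, x_rec)
```

Each coupling layer leaves half of its input unchanged, so the inverse can recompute the same scale and shift and undo the transformation exactly, however complicated those functions are. Inferring the most plausible data for a given result additionally requires a probability model over the network's outputs, which this sketch omits.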

FZJ: What applications are you focusing on?

Our project is mainly focused on basic research. Central to this is the analysis of electroencephalography (EEG) data. To this end, we are working with Tonio Ball from the University of Freiburg, among others, who sees AI systems as potential aids for detecting diseases such as epilepsy.

EEG signals also play a role in controlling prosthetics, where AI systems help to convert brain signals into control commands. To make sure this works not just in the lab but in day-to-day life, specific sources of error have to be eliminated. For example, operating a light switch can produce signal patterns very similar to the brain signals used to control a prosthesis. To keep a switch from causing a malfunction, these noise signals have to be removed precisely. This is another of the project’s goals.


Pictures may be used for editorial, event-related reporting provided the source is cited.