How the brain rewires itself during learning

Researchers at the Bernstein Center in Freiburg develop a new model to understand plastic processes in the brain's networks

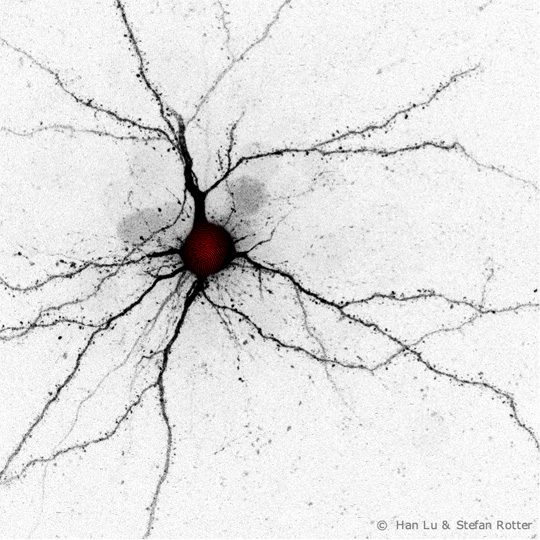

The research team uses experiments to determine a temporal sequence of synaptic degradation and formation processes in the brain, which they consider a strong indication of the accuracy of their new network model, analogous to a “fingerprint.” Photo: Han Lu, Stefan Rotter

Our brains change their material structure when we learn – this has long been known. This adaptability, also called plasticity, gives us enormous advantages and is often essential for survival. But what exactly happens in the brain during this process? Which neuronal mechanisms and functional principles come into play? Two research teams led by Prof. Dr. Stefan Rotter of the Bernstein Center at the University of Freiburg have now proposed a new variant of learning networks and demonstrated its performance using computer simulations. The new model is based on the widely confirmed experimental observation that nerve cells are constantly rewiring. According to the scientists, this happens all the time – even in mature brains, and to a much greater extent than most textbooks suggest. Some predictions of a model based on this assumption have now been confirmed in experiments conducted on biological brains.

Every nerve cell has an eye on itself

“It is crucial how nerve cells in the brain communicate with each other, how they connect with each other with the help of synapses and form larger units,” says Rotter, professor of theoretical and computational neuroscience at the Faculty of Biology. As early as 1949, psychologist Donald O. Hebb postulated that “neurons wire together if they fire together” – which became known as Hebb’s learning rule. Until now, it has been assumed that the key to this process lies mainly in the electrochemical fine-tuning of pre-existing synapses, where the regulation of a synapse depends on the activity of the two nerve cells involved. And the control signal for this, a form of correlation, must be calculated separately for each individual pair of nerve cells.

Together with Dr. Júlia Gallinaro and Nebojša Gašparović, Rotter has now developed a concept that is significantly less complex and shows more robust learning behavior. The starting point: Each nerve cell initially looks only after itself, with the goal of maintaining its own activity level. In doing so, it functions like a thermostat in which “temperature” is replaced by “activity,” according to Rotter: If the activity of a nerve cell is too high, the number of its own incoming and outgoing excitatory synapses is reduced. If its activity is too low, new excitatory synapses are created. Rotter calls this self-regulation “homeostatic control.”
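The thermostat analogy can be illustrated with a few lines of Python. This is only a minimal sketch and not the researchers' model: the linear relation between synapse count and activity, and the names and values of `target`, `gain`, `rate` and `external_input`, are assumptions made for illustration.

```python
def homeostatic_step(activity, target, n_synapses, rate=1.0):
    """Return the updated excitatory synapse count of one neuron.

    Activity above the target removes synapses, activity below it adds them.
    The count is treated as a continuous quantity and cannot drop below zero.
    """
    change = rate * (target - activity)
    return max(0.0, n_synapses + change)


def settle(external_input, n_synapses, target=5.0, gain=0.1, steps=200):
    """Apply the rule repeatedly and return the resulting synapse count.

    Assumption (hypothetical): activity = gain * n_synapses + external_input.
    """
    for _ in range(steps):
        activity = gain * n_synapses + external_input
        n_synapses = homeostatic_step(activity, target, n_synapses)
    return n_synapses


if __name__ == "__main__":
    at_rest = settle(external_input=0.0, n_synapses=50.0)
    stimulated = settle(external_input=2.0, n_synapses=at_rest)
    recovered = settle(external_input=0.0, n_synapses=stimulated)
    print(at_rest, stimulated, recovered)
```

In this toy setting the synapse count falls while the extra input is present and climbs back once it is removed, which is the behavior the thermostat picture describes.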

Neurons contact other neurons that are ready for it

The trick is that the elimination of existing contacts and the formation of new ones in the cellular network occur at random. When neurons search for new partners after the end of a stimulation to maintain their activity level, they inevitably encounter the neurons with which they were previously stimulated together – and which are now also looking for new contacts. In this way, it happens quite incidentally that neurons that fire together also eventually wire up with each other, without any further need for regulation.
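The following toy simulation sketches why random rewiring alone can be enough. It is a strongly simplified illustration, not the published model: there are no activity dynamics, the stimulation is reduced to a fixed `delete_fraction`, and it is assumed that both ends of a deleted synapse become free and look for a new partner. All names and parameter values are hypothetical.

```python
import random


def simulate_rewiring(n_neurons=100, n_stimulated=20, in_degree=10,
                      delete_fraction=0.5, seed=0):
    """Return the within-group synapse counts (before, after) one stimulation."""
    rng = random.Random(seed)
    stimulated = set(range(n_stimulated))

    # Initial wiring: every neuron receives in_degree inputs from random others.
    synapses = []
    for post in range(n_neurons):
        others = [pre for pre in range(n_neurons) if pre != post]
        synapses.extend((pre, post) for pre in rng.sample(others, in_degree))

    def within_group(syns):
        # Count synapses whose sender and receiver were both stimulated.
        return sum(1 for pre, post in syns
                   if pre in stimulated and post in stimulated)

    before = within_group(synapses)

    # During stimulation: overactive neurons lose synapses at random.
    kept, free_out, free_in = [], [], []
    for pre, post in synapses:
        overactive = pre in stimulated or post in stimulated
        if overactive and rng.random() < delete_fraction:
            free_out.append(pre)   # sender now searches for a new target
            free_in.append(post)   # receiver now searches for a new input
        else:
            kept.append((pre, post))

    # After stimulation: free ends are paired blindly, without any
    # pairwise correlation signal. Self-connections are discarded.
    rng.shuffle(free_in)
    new = [(pre, post) for pre, post in zip(free_out, free_in) if pre != post]

    after = within_group(kept + new)
    return before, after


if __name__ == "__main__":
    before, after = simulate_rewiring()
    print("synapses within the stimulated group:", before, "->", after)
```

Because the previously stimulated neurons hold most of the free contact sites, blind pairing connects them to each other far more often than chance wiring did at the start, so the group ends up more densely interconnected than before.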

In computer simulations, this model works very well, says Rotter: “A number of learning paradigms known from psychology, such as classical conditioning or associative memory, can be implemented straightforwardly with this rule.”

Rewiring the network

Direct experimental testing of this model, which is based on constant rewiring, is not possible with current technology – yet a second research paper provides strong evidence that it is correct. It was presented by Dr. Han Lu, Júlia Gallinaro and Stefan Rotter together with Prof. Dr. Claus Normann from the University Medical Center Freiburg and Dr. Ipek Yalçın (Centre national de la recherche scientifique and Université de Strasbourg/France).

Here, too, the scientists first developed a computer simulation that shows a massive rewiring after stimulation of nerve cells, combined with a reorganization of their entire “social” network. The researchers then used so-called optogenetic stimulation in the brains of mice in the laboratory. In this process, artificially photosensitized nerve cells are repeatedly stimulated with light. Finally, they compared the measured changes in the animals’ brains with the results from computer simulations.

Experiment shows “fingerprint” of the model

And indeed, the measurements performed can be interpreted as showing that stimulation of the nerve cells in the mouse brains initially leads to a breakdown of synapses – presumably because the cells were responding to the increased excitation level – and then, after the end of the stimulation, to the rebuilding of connections between the previously jointly stimulated cells. Not only that: “We observed exactly the same characteristic time course in this process that we had previously seen in the computer simulation,” Rotter says. The team considers the sequence of synaptic degradation and buildup processes, analogous to a “fingerprint,” a strong indication of the correctness of their model.

The structure of the network changes and function adapts without forgetting everything previously learned: Rotter says the new model could help us better understand this fascinating process. “I think future research should focus much more on structural plasticity,” he says. “Very little has been done so far, probably because of the technical difficulties associated with such experiments.”