Estimating the pace of change

An international team of researchers from Tübingen and Cold Spring Harbor (New York) has developed a new way of determining how quickly changes typically happen. The method avoids systematic errors that affected previous estimates of timescales, for example those of neural activity in the brain. The results are published in the journal Nature Computational Science. First applications of the method to neural recordings from the visual cortex highlight it as a powerful tool for neuroscience and many other disciplines.

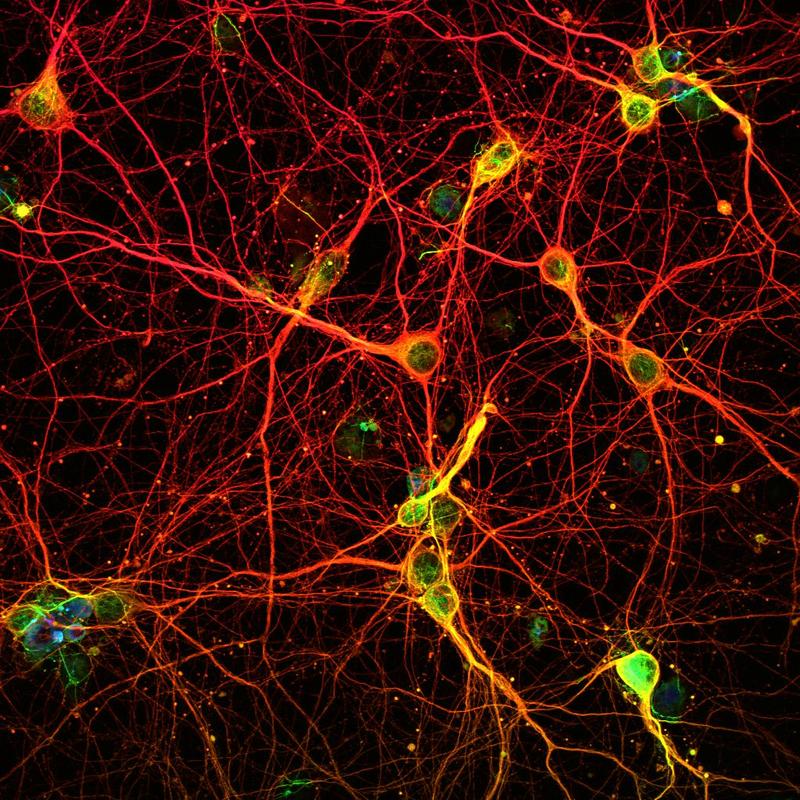

Neurons in a mouse cortex. ALol88 via Wikimedia Commons, CC BY 4.0

Bernstein members involved: Anna Levina, Roxana Zeraati

Each process in nature happens at its own pace: neurons take milliseconds to signal to each other, demographic changes occur over years, and climate change unfolds over decades or even millennia. The period a process typically needs to undergo a change is known as its timescale, and knowing this timescale is essential for understanding the process itself.

How long does change typically take?

Statisticians define this idea of a timescale precisely. Take neural processes as an example: the state of your brain right now is influenced by its state some time ago. The strength of this influence is captured by the temporal correlation, which decreases over time: the more time has elapsed, the weaker the dependency. The timescale of your neural processes is exactly this: a number that indicates how fast this correlation typically decreases, and thus how fast the brain forgets its state.
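To make the definition concrete, here is a minimal sketch that assumes the simplest case, in which the correlation decays exponentially with the time lag, AC(lag) = exp(-lag / tau). The function name and the 20 ms timescale are illustrative assumptions, not values from the study.

```python
import math


def exponential_autocorrelation(lag: float, tau: float) -> float:
    """Correlation between the state now and the state `lag` time units ago,
    assuming (for illustration) a single exponential decay with timescale `tau`."""
    return math.exp(-lag / tau)


# Hypothetical timescale of 20 ms: after one timescale the correlation
# has dropped to 1/e (about 0.37) of its value at lag 0.
tau_ms = 20.0
for lag_ms in (0, 10, 20, 40, 80):
    corr = exponential_autocorrelation(lag_ms, tau_ms)
    print(f"lag {lag_ms:>2} ms -> correlation {corr:.3f}")
```

Under this assumption, tau is the lag at which the correlation has fallen to 1/e; a process with a larger tau forgets its state more slowly.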

In practice, determining the timescale from empirical data can be tricky. The usual approach is to compute correlations between measurements of brain activity taken at different times; how quickly this dependency decreases with the time lag then indicates the timescale.
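The direct approach can be sketched as follows, under stated assumptions: a toy AR(1) process (time step 1) stands in for a recorded neural signal, because its true autocorrelation is known to be exp(-lag / tau); the timescale is then read off by fitting a straight line to the logarithm of the empirical autocorrelation. All function names and parameter values are hypothetical.

```python
import math
import random


def simulate_ar1(n_steps: int, tau: float, seed: int) -> list[float]:
    """Toy stand-in for a neural signal (time step = 1): an AR(1) process whose
    true autocorrelation is exactly exp(-lag / tau)."""
    rng = random.Random(seed)
    a = math.exp(-1.0 / tau)           # correlation between consecutive steps
    noise_sd = math.sqrt(1.0 - a * a)  # keeps the stationary variance at 1
    x = rng.gauss(0.0, 1.0)            # start in the stationary distribution
    trace = []
    for _ in range(n_steps):
        trace.append(x)
        x = a * x + noise_sd * rng.gauss(0.0, 1.0)
    return trace


def sample_autocorrelation(trace: list[float], max_lag: int) -> list[float]:
    """Empirical autocorrelation at lags 0..max_lag: subtract the trace mean,
    then correlate the trace with lagged copies of itself."""
    n = len(trace)
    mean = sum(trace) / n
    c = [v - mean for v in trace]
    var = sum(v * v for v in c) / n
    return [sum(c[i] * c[i + k] for i in range(n - k)) / (n * var)
            for k in range(max_lag + 1)]


def naive_timescale(ac: list[float]) -> float:
    """Fit log(AC) = -lag / tau by least squares through the origin, using
    lags 1, 2, ... up to the first non-positive autocorrelation value."""
    num = den = 0.0
    for k in range(1, len(ac)):
        if ac[k] <= 0:
            break
        num += k * math.log(ac[k])
        den += k * k
    if den == 0.0:
        raise ValueError("autocorrelation is non-positive already at lag 1")
    return -den / num  # slope = num / den = -1 / tau


# A long recording (20,000 steps) with a hypothetical true timescale of 10 steps.
trace = simulate_ar1(n_steps=20_000, tau=10.0, seed=1)
ac = sample_autocorrelation(trace, max_lag=20)
print(f"estimated timescale: {naive_timescale(ac):.1f} (true value: 10.0)")
```

On a long recording like this one the direct fit lands close to the true value; the next paragraph explains why this stops being true for short recordings.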

Recreating the cake to determine its sugar content

“Unfortunately, this method of determining timescales is flawed and can create misleading results,” explains Roxana Zeraati, a researcher at the University of Tübingen and at the Max Planck Institute for Biological Cybernetics. “The problem is that empirical data are always measured over a finite, often short, time span. Because of this, the average dependency between what happens at different points in time is systematically underestimated.” Zeraati stresses how important a correct measurement of timescales is: “A lot of conclusions in neuroscience rely on precise estimates of timescales. To give just one example, we think that aberrant timescales are linked to autism. But people never realized before that they might be estimating timescales incorrectly.”
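The underestimation can be reproduced in a toy simulation (our own illustration, not the analysis from the study). It assumes the same hypothetical AR(1) stand-in as above and compares the direct estimate from a few long traces with the estimate from many short traces of the same process. One mechanism at work: each trace's own mean is subtracted, and on a short trace that mean absorbs part of the slow fluctuations, so correlations at longer lags come out too small.

```python
import math
import random


def simulate_ar1(n_steps, tau, rng):
    """Toy AR(1) signal (time step = 1) with true autocorrelation exp(-lag / tau)."""
    a = math.exp(-1.0 / tau)
    noise_sd = math.sqrt(1.0 - a * a)
    x, trace = rng.gauss(0.0, 1.0), []
    for _ in range(n_steps):
        trace.append(x)
        x = a * x + noise_sd * rng.gauss(0.0, 1.0)
    return trace


def sample_autocorrelation(trace, max_lag):
    """Empirical autocorrelation with the trace's own mean subtracted."""
    n = len(trace)
    mean = sum(trace) / n
    c = [v - mean for v in trace]
    var = sum(v * v for v in c) / n
    return [sum(c[i] * c[i + k] for i in range(n - k)) / (n * var)
            for k in range(max_lag + 1)]


def naive_timescale(ac):
    """Least-squares fit of log(AC) = -lag / tau through the origin, using lags
    1, 2, ... up to the first non-positive autocorrelation value."""
    num = den = 0.0
    for k in range(1, len(ac)):
        if ac[k] <= 0:
            break
        num += k * math.log(ac[k])
        den += k * k
    if den == 0.0:
        raise ValueError("autocorrelation is non-positive already at lag 1")
    return -den / num


def trial_averaged_estimate(tau, n_trials, n_steps, max_lag, seed):
    """Average the autocorrelation over independent trials, then fit directly."""
    rng = random.Random(seed)
    acs = [sample_autocorrelation(simulate_ar1(n_steps, tau, rng), max_lag)
           for _ in range(n_trials)]
    mean_ac = [sum(col) / n_trials for col in zip(*acs)]
    return naive_timescale(mean_ac)


TRUE_TAU = 20.0  # hypothetical timescale, in time steps
long_est = trial_averaged_estimate(TRUE_TAU, n_trials=5, n_steps=20_000, max_lag=15, seed=0)
short_est = trial_averaged_estimate(TRUE_TAU, n_trials=200, n_steps=100, max_lag=15, seed=0)
print(f"true tau:                          {TRUE_TAU}")
print(f"long recordings (20,000 steps):    {long_est:.1f}")
print(f"short recordings (100 steps each): {short_est:.1f}")
```

Averaging over many short trials does not remove the problem: the error is a bias, not noise, so the short-trace estimate stays well below the true timescale however many trials are added.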

This is why Zeraati and her collaborators came up with a new way to determine timescales: they propose generating artificial data from a computer model that closely match the empirical data. Because the timescales used in the model are known, the artificial data that best match the recordings reveal the timescale of the real process. Anna Levina, an assistant professor in Tübingen and Zeraati’s PhD advisor, explains the method with a simple analogy: “If chemically analyzing the sugar content in a cake your grandmother made is tricky – possibly because sugar from the frosting seeps into the cake and messes with your measurements – you can instead try to recreate the cake several times with different amounts of sugar, and the cake that comes closest to the original tells you how much sugar was in your grandmother’s, precisely because you know how much sugar you used for your cakes.”
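The idea can be sketched in a few dozen lines. The study's method is, to our knowledge, built on approximate Bayesian computation (ABC); what follows is a deliberately simplified rejection-ABC variant, not the authors' implementation. Assumptions, all hypothetical: a single-timescale AR(1) generative model, a uniform prior on tau between 2 and 60 steps, squared error between autocorrelation curves as the distance, and keeping the closest 10% of candidates. The key point mirrors the cake analogy: each synthetic data set has exactly the size of the recording and is analyzed with the same biased estimator, so the bias affects both sides of the comparison equally.

```python
import math
import random


def simulate_ar1(n_steps, tau, rng):
    """Single-timescale generative model (time step = 1): AR(1) process with
    true autocorrelation exp(-lag / tau) and unit stationary variance."""
    a = math.exp(-1.0 / tau)
    noise_sd = math.sqrt(1.0 - a * a)
    x, trace = rng.gauss(0.0, 1.0), []
    for _ in range(n_steps):
        trace.append(x)
        x = a * x + noise_sd * rng.gauss(0.0, 1.0)
    return trace


def trial_averaged_autocorrelation(traces, max_lag):
    """Mean-subtracted empirical autocorrelation, averaged over trials. The same
    (biased) estimator is applied to recorded and synthetic data alike."""
    total = [0.0] * (max_lag + 1)
    for trace in traces:
        n = len(trace)
        mean = sum(trace) / n
        c = [v - mean for v in trace]
        var = sum(v * v for v in c) / n
        for k in range(max_lag + 1):
            total[k] += sum(c[i] * c[i + k] for i in range(n - k)) / (n * var)
    return [t / len(traces) for t in total]


def naive_timescale(ac):
    """Direct fit of log(AC) = -lag / tau, for comparison (biased on short data)."""
    num = den = 0.0
    for k in range(1, len(ac)):
        if ac[k] <= 0:
            break
        num += k * math.log(ac[k])
        den += k * k
    if den == 0.0:
        raise ValueError("autocorrelation is non-positive already at lag 1")
    return -den / num


def rejection_abc_timescale(observed_ac, n_trials, n_steps, prior=(2.0, 60.0),
                            n_draws=150, n_keep=15, seed=0):
    """Simplified rejection ABC: draw candidate timescales from a uniform prior,
    simulate synthetic data of the same size as the recording, and keep the
    candidates whose autocorrelation lies closest to the observed one."""
    rng = random.Random(seed)
    max_lag = len(observed_ac) - 1
    scored = []
    for _ in range(n_draws):
        tau = rng.uniform(*prior)  # "bake a cake" with a known amount of sugar
        traces = [simulate_ar1(n_steps, tau, rng) for _ in range(n_trials)]
        synthetic_ac = trial_averaged_autocorrelation(traces, max_lag)
        distance = sum((s - o) ** 2 for s, o in zip(synthetic_ac[1:], observed_ac[1:]))
        scored.append((distance, tau))
    scored.sort()  # smallest distance first
    accepted = [tau for _, tau in scored[:n_keep]]
    return sum(accepted) / n_keep  # mean of the approximate posterior


# Hypothetical "recording": 30 short trials of a process with tau = 20 steps.
TRUE_TAU, N_TRIALS, N_STEPS, MAX_LAG = 20.0, 30, 100, 15
data_rng = random.Random(123)
recording = [simulate_ar1(N_STEPS, TRUE_TAU, data_rng) for _ in range(N_TRIALS)]
observed_ac = trial_averaged_autocorrelation(recording, MAX_LAG)

direct_est = naive_timescale(observed_ac)
abc_est = rejection_abc_timescale(observed_ac, N_TRIALS, N_STEPS)
print(f"true tau {TRUE_TAU}, direct fit {direct_est:.1f}, simulation-based {abc_est:.1f}")
```

A real analysis would need a generative model that resembles the recorded signal more closely and a principled acceptance rule; both are outside the scope of this sketch, which only shows why matching synthetic data sidesteps the finite-length bias.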

Analyses of memory

The researchers then tested their new method on neural recordings from the visual cortex taken from a previous study. “We wondered if spontaneous fluctuations of neural activity were ruled by a single timescale, or if maybe the process we observed was the sum of several processes with different timescales,” says Levina. “In that particular case, we saw that the process in the brain involved two different intrinsic timescales – something that had never been reported before.”
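What "the sum of several processes with different timescales" means for the correlation can be illustrated with a short sketch. It assumes two independent components with exponential autocorrelations, mixed by their share of the total variance; the fast 5 ms and slow 50 ms values and the helper names are hypothetical, not results from the study. The "local timescale", -1 divided by the slope of the log-autocorrelation, is constant for a single exponential but drifts with the lag for a mixture, which is why a single number cannot describe such a process.

```python
import math


def two_timescale_autocorrelation(lag, tau_fast, tau_slow, weight_fast):
    """Autocorrelation of a sum of two independent processes, each with an
    exponential autocorrelation; weights are fractions of the total variance."""
    return (weight_fast * math.exp(-lag / tau_fast)
            + (1.0 - weight_fast) * math.exp(-lag / tau_slow))


def local_timescale(ac_fn, lag, h=1e-3):
    """-1 / (d/dlag) log AC, via a forward difference. For a single exponential
    this equals tau at every lag; for a mixture it changes with the lag."""
    slope = (math.log(ac_fn(lag + h)) - math.log(ac_fn(lag))) / h
    return -1.0 / slope


def example_ac(lag):
    # Hypothetical mixture: equal shares of a 5 ms and a 50 ms component.
    return two_timescale_autocorrelation(lag, tau_fast=5.0, tau_slow=50.0, weight_fast=0.5)


for lag in (0, 10, 50, 200):
    print(f"lag {lag:>3} ms -> local timescale {local_timescale(example_ac, lag):.1f} ms")
```

At short lags the fast component dominates the decay, while the long-lag tail reflects only the slow one. In a simulation-based approach, one could compare a one-timescale and a two-timescale generative model against the same data to decide which description fits better.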

Zeraati adds: “In a similar way, we can analyze almost any intrinsic process in the brain, for example how neurons remember the past. This makes our new estimation method an incredibly valuable and powerful tool for neuroscientists and other researchers.”