An artificial baby learns to speak – AI simulations help understand processes in the early childhood brain

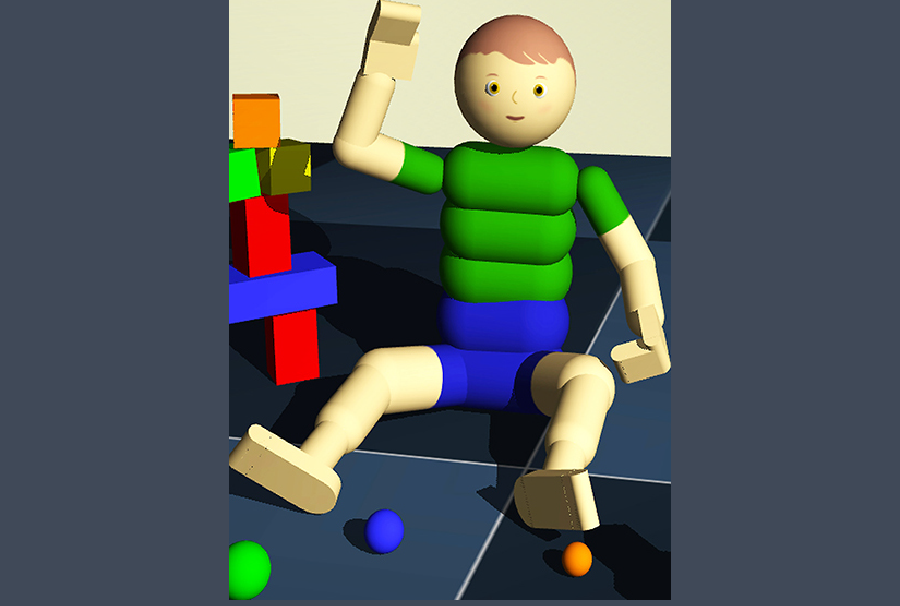

A simulated child and a domestic environment that resembles a computer game: these are the research foundations of the group led by Prof. Jochen Triesch at the Frankfurt Institute for Advanced Studies (FIAS). The group uses computer models to find out how we learn to see and understand, and how this could help improve machine learning.

A set of images simulates a toddler playing and moving around with toy models. The child occasionally “hears” comments from the caregiver. Image: Dominik Mattern et al., Journal of temporary stand-ins, (2024) https://ieeexplore.ieee.org/document/10382408

Bernstein members involved: Gemma Roig, Jochen Triesch

How does a toddler learn to recognize objects? How do they learn to distinguish a banana from a car, but also to recognize very different cars, in all colors, sizes, shapes, and degrees of abstraction, as cars, and later even to name them? To do this, our brain must link knowledge about appearance with many other contexts and with the name of the object.

In their second year of life, children begin to understand and assign meanings to words. Objects and language play an important role in this process. Triesch and his team are imitating this learning process in a kind of computer game: in a “living room” with several objects, a “child” learns about different objects that a caregiver names in a simulated dialogue.

The Triesch working group has teamed up with Prof. Gemma Roig’s team at the Institute of Computer Science at Goethe University to develop a computer model that mimics how an infant learns the appearance of a single object, an entire category of objects, the associated term, and the context. Computer graphics simulate the visual experience of a toddler in a virtual apartment. Triesch’s postdoc Arthur Aubret programs the environment, the actions, and the experiments. This allows him to systematically vary influences, such as whether the caregiver says something often or rarely.

“Often, when the caregiver says something, the child is not paying attention to the object at all,” says Triesch. Researchers have found that about half the time, the child is looking at something else entirely. Aubret has transferred this to the computer model. Nevertheless, the learning process works. It is much more complex than one might think at first glance: The child explores the object from different angles to learn about its three-dimensionality—in other words, to remember what a car looks like from the front, bottom, top, and side. In addition, an object with the same name can be made of different materials—metal, plastic, fabric, wood—and vary in shape and size. The brain must therefore create a classification system—in this case, using the generic term “car.”
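Why learning can survive this much inattention can be illustrated with a deliberately simple toy simulation. The sketch below is not the team's model, which works with rendered images and neural networks; it only counts how often each heard word coincides with each object in view. All names and parameters (`simulate_naming`, `p_attending`, the object list) are hypothetical, and the value 0.5 stands in for the "about half the time" mentioned above.

```python
import random
from collections import Counter, defaultdict


def simulate_naming(objects, n_utterances=2000, p_attending=0.5, seed=0):
    """Count how often each heard word coincides with each looked-at object."""
    rng = random.Random(seed)
    counts = defaultdict(Counter)  # counts[word][object in view when word was heard]
    for _ in range(n_utterances):
        named = rng.choice(objects)  # object the caregiver talks about
        if rng.random() < p_attending:
            seen = named  # the child happens to look at the named object
        else:
            # the child is looking at some other object entirely
            seen = rng.choice([o for o in objects if o != named])
        counts[named][seen] += 1
    return counts


def learned_meanings(counts):
    """For each word, pick the object it was most often paired with."""
    return {word: seen.most_common(1)[0][0] for word, seen in counts.items()}


objects = ["car", "banana", "ball", "cup", "shoe"]
meanings = learned_meanings(simulate_naming(objects))
print(meanings)
```

Because the wrong pairings are spread over many different objects while the correct pairing keeps recurring, the correct object ends up with the highest count for each word under these assumptions, even though half of the individual naming events are misleading.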

A child learns what a toy car looks like in space by exploring the toy from different angles.

Photos: R_yuliana/Shutterstock

Pigeonholing is important for the brain

“It’s fascinating that the amount of information in a picture can be so enormous,” explains Triesch. For one thing, there may be other things in the field of vision, such as toys or furniture. And while the child is playing, the caregiver may comment occasionally – “oh, how nice, there’s a ball” – but often talks about other objects as well. According to the researchers’ findings, this does not seem to interfere with the learning process. Interaction is important, but if attention is focused on the wrong object here and there, this does not have a strong negative impact. “And more attention, i.e., constantly commenting on everything, does little to promote the learning process,” says Triesch.

The scientist is particularly fascinated by the concepts that form in our minds: the classification of similar things, things that belong together spatially or temporally, is clearly more demanding than what artificial intelligence has been able to achieve so far. The brain also links a flexible appearance with a word, thus laying the foundation for language and communication. Peter Bichsel illustrated how important this is for our socialization over 50 years ago in his short story “A Table Is a Table.” In it, a lonely man invents his own names for things and becomes an outsider as a result.

The learning brain of a toddler links neighboring things as similar and connects seeing (and feeling, smelling) with hearing: The German word “be-greifen” (to grasp) describes this process quite vividly. “Our computer model is not an exact representation of reality,” Triesch qualifies, “but the mechanisms adequately reflect the processes.” They recreate the interactions that lead to the corresponding connections. And they allow us to understand how these processes work in the brain.

In addition, the brain recognizes higher structures, such as the fact that certain devices belong in the kitchen or bathroom. A fork and a plate have the same context for us: they belong in the kitchen. When our brain relates objects to each other based on their meaning and function, this is called a “semantic relationship.” But how does our brain learn these connections?

“The computer model stores the connecting relationships between objects, such as kitchen or bedroom, in higher layers of the network, similar to how humans do,” writes the team of authors. The identity or category of an object, on the other hand, is better reflected in lower layers. This multi-layered model mimics the hierarchical information processing of the visual cortex: Early layers correspond to early stages of processing in the brain, while later layers correspond to higher-level structures. Both the computer model and the human brain use two complementary learning strategies for this purpose. One of them, the linking of images and language, ensures that different objects in the same category are represented similarly.
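How linking images to a shared word can make different objects of one category look alike internally can be sketched in a few lines. This is a toy illustration under strong assumptions, not the team's training procedure: two random vectors stand in for the internal representations of a metal car and a wooden car, a third for the word "car", and a simple attraction step (the hypothetical `pull_toward`) moves each object representation a little toward the word every time the two occur together.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def pull_toward(vec, target, rate):
    """Move vec a fraction `rate` of the way toward target."""
    return [v + rate * (t - v) for v, t in zip(vec, target)]


def random_vector(rng, dim):
    """Random stand-in for an internal representation (assumption)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


rng = random.Random(0)
dim = 16
word_car = random_vector(rng, dim)    # representation of the word "car"
metal_car = random_vector(rng, dim)   # representation of one toy car
wooden_car = random_vector(rng, dim)  # representation of a different toy car

before = cosine(metal_car, wooden_car)
for _ in range(20):  # 20 naming events in which each car is paired with the word
    metal_car = pull_toward(metal_car, word_car, rate=0.2)
    wooden_car = pull_toward(wooden_car, word_car, rate=0.2)
after = cosine(metal_car, wooden_car)

print(f"similarity before: {before:.2f}, after: {after:.2f}")
```

Both cars are drawn toward the same word, so they also end up close to each other, although they were never compared directly. Real models learn such alignments with far richer objectives; the sketch only shows the direction of the effect.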

Developing modern AI from the human learning process

“With this knowledge, we could build computers that learn more autonomously – just like a toddler,” hopes Triesch. Until now, AI has been based on vast amounts of data stored on the internet, which by now has been almost exhaustively evaluated. This passive learning from data differs from the active learning of a toddler, who develops a complex understanding of the world from a small amount of information, their own observations, and comments from caregivers. The child stores not only the appearance of an object, but also categories and contexts, together with the corresponding word. Triesch sees this as a basis for the development of future generations of robots that, like children, learn to navigate their world and communicate with us about it.

Author: Anja Störiko/FIAS, Source: Forschung Frankfurt 1.25